I found more information on panning, and one of the things I learned is that there is a "panning rule" or "panning law", which Wikipedia defines as follows:

The pan control or pan pot (panoramic potentiometer) has an internal architecture which determines how much of each source signal is sent to the two buses that are fed by the pan control. The power curve is called taper or law. Pan control law might be designed to send -4.5 dB to each bus when centered or 'straight up' or it might have -6 dB or -3 dB in the center. If the two output buses are further combined to make a mono signal, then a pan control law of -6 dB is optimum. If the two output buses are to remain stereo then a pan control law of -3 dB is optimum. Pan control law of -4.5 dB is a compromise between the two.

[SOURCE: Audio Panning (Wikipedia)]
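To make the three pan laws concrete, here is a minimal Python sketch of one common way to compute left and right gains for a mono source. The sine/cosine (constant-power) curve gives about -3 dB at center, a straight linear crossfade gives about -6 dB, and taking the geometric mean of those two curves gives about -4.5 dB. The function names and the -1.0 to +1.0 position scale are my own illustrative assumptions, not how Digital Performer 7 or Notion 3 necessarily implement their controls.

```python
import math


def pan_gains(position: float, law_db: float = -3.0) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a mono source.

    position: -1.0 = hard left, 0.0 = center, +1.0 = hard right (assumed scale).
    law_db:   center attenuation: -3.0 (constant power), -6.0 (linear),
              or -4.5 (compromise).
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be between -1.0 and +1.0")
    # Map the knob position onto a quarter circle, 0 .. pi/2.
    theta = (position + 1.0) * math.pi / 4.0
    sine_left, sine_right = math.cos(theta), math.sin(theta)            # -3 dB law
    lin_left, lin_right = (1.0 - position) / 2.0, (1.0 + position) / 2.0  # -6 dB law
    if law_db == -3.0:
        return sine_left, sine_right
    if law_db == -6.0:
        return lin_left, lin_right
    if law_db == -4.5:
        # Geometric mean of the two curves lands at about -4.5 dB at center.
        return math.sqrt(sine_left * lin_left), math.sqrt(sine_right * lin_right)
    raise ValueError("law_db must be -3.0, -4.5, or -6.0")


def to_db(gain: float) -> float:
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)


for law in (-3.0, -4.5, -6.0):
    left, _ = pan_gains(0.0, law)
    print(f"{law:+.1f} dB law: center gain {left:.4f} = {to_db(left):.2f} dB")
```

Running it shows center gains of about -3.01, -4.52, and -6.02 dB, and with the constant-power law the squared left and right gains always add up to 1, which is why the perceived loudness stays steady as a mono source moves across the stereo field.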

But the most intriguing bit of information is that while a single panning knob actually does panning for a monaural track, when there is a single knob for a stereo track it is doing balancing rather than panning, which explains a few of the things I have noticed in my experiments . . .

The balance control takes a stereo source and varies the relative level of the two channels. The left channel will never come out of the right speaker by the action of a balance control. A pan control can send the left channel to either the left or the right speakers or anywhere in between. Note that mixers which have stereo input channels controlled by a single pan pot are in fact using the balance control architecture in those channels, not pan control.

[SOURCE: Audio Panning (Wikipedia)]

Balance can mean the amount of signal from each channel reproduced in a stereo audio recording. Typically, a balance control in its center position will have 0 dB of gain for both channels and will attenuate one channel as the control is turned, leaving the other channel at 0 dB.

[SOURCE: Stereophonic Balance (Wikipedia)]
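Following the Wikipedia description, a balance control can be sketched as two independent gains, where the center position leaves both channels at 0 dB (unity gain) and turning the control only ever attenuates one side. The linear taper and the -1.0 to +1.0 position scale below are assumptions for illustration; real mixers may use a different taper. Note that the left input is only ever multiplied into the left output, which is exactly why a balance control can never move the left channel into the right speaker.

```python
def balance(left_in: float, right_in: float, position: float) -> tuple[float, float]:
    """Apply a balance control to one stereo sample pair.

    position: -1.0 (full left) .. 0.0 (center) .. +1.0 (full right), assumed scale.
    At center both gains are 1.0 (0 dB). Turning right attenuates only the
    left channel; turning left attenuates only the right channel.
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be between -1.0 and +1.0")
    left_gain = 1.0 - max(position, 0.0)   # linear taper (assumption)
    right_gain = 1.0 + min(position, 0.0)
    # The left input never feeds the right output, and vice versa.
    return left_in * left_gain, right_in * right_gain


print(balance(0.8, 0.6, 0.0))   # center: both channels unchanged
print(balance(0.8, 0.6, 0.5))   # right of center: left halved, right untouched
print(balance(1.0, 0.0, 1.0))   # left-only signal turned hard right: silence
```

The last call is the telling one: a sound that exists only in the left channel simply fades out as the control turns right, instead of moving to the right speaker as it would with a true pan control.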

In some respects, I suppose this might be obvious to everyone on the planet except me, but if the "panning" control for a stereo track in Digital Performer 7 and Notion 3 actually is controlling stereophonic balance, then I would call it a "balancing" control rather than a "panning" control . . .

I need to do some experiments to verify what is happening, but I think that the "panning" controls in Digital Performer 7 actually are balance controls, while it is likely that the "panning" controls in Notion 3 in fact are panning controls . . .

The Notion 3 panning control has three dots, which distinguishes it from the more simplistic "panning" control in Digital Performer 7. In the Notion 3 Mixer the leftmost dot normally is "left" and the rightmost dot normally is "right", but you can reverse the "left" and "right" dots, at which point the background color changes to pink or red as a visual indicator that you have reversed them. This suggests that the Notion 3 "panning" control is a hybrid control with two functionally separate "pan" controls, one per channel (represented visually by the outermost dots), which is a very elegant way to represent the underlying algorithms and functionality . . .

And this maps to needing to do yet another set of experiments, which I think will be interesting . . .
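If the hypothesis about the Notion 3 control is right, it behaves like two independent pan pots, one per input channel, whose outputs are summed onto the left and right buses. Here is a minimal Python sketch of that architecture; the names `left_dot` and `right_dot`, the -1.0 to +1.0 scale, and the use of a constant-power (-3 dB) law for each pot are my assumptions, not documented Notion 3 behavior. Unlike a balance control, this arrangement can send the left channel to the right speaker, and reversing the dots swaps the channels.

```python
import math


def constant_power_pan(position: float) -> tuple[float, float]:
    """(left_gain, right_gain) for one channel; -1.0 = hard left, +1.0 = hard right."""
    theta = (position + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)


def dual_pan(left_in: float, right_in: float,
             left_dot: float, right_dot: float) -> tuple[float, float]:
    """Pan each input channel separately, then sum both onto the output buses."""
    l_to_left, l_to_right = constant_power_pan(left_dot)    # where the L input goes
    r_to_left, r_to_right = constant_power_pan(right_dot)   # where the R input goes
    out_left = left_in * l_to_left + right_in * r_to_left
    out_right = left_in * l_to_right + right_in * r_to_right
    return out_left, out_right


# Default dots (L far left, R far right): the stereo image passes through.
print([round(x, 6) for x in dual_pan(0.3, 0.7, -1.0, 1.0)])
# Reversed dots: the left input now comes out of the right speaker.
print([round(x, 6) for x in dual_pan(1.0, 0.0, 1.0, -1.0)])
```

The rounding in the print calls only hides the tiny floating-point residue from cos(pi/2), which is not exactly zero in binary arithmetic.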

Based on what I have discovered so far, it appears likely that the stereo track "panning" controls in Digital Performer 7 follow a "pan rule" even though they actually are balance controls. Which "pan rule" they follow is something I still need to research, but this tends to confirm the logarithmic taper hypothesis . . .
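One way to run the experiment for identifying which "pan rule" a control follows is to render the same test tone twice, once with the control turned fully toward one side and once centered, and then compare the level of that side's channel. Here is a minimal Python sketch of the arithmetic; the function names are hypothetical, the rendering and export steps in Digital Performer 7 are not shown, and the 0 dB candidate stands in for a plain balance control, which per the Wikipedia description above leaves both channels at 0 dB in the center.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of a block of samples (must not be empty)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def center_attenuation_db(hard_side: list[float], centered: list[float]) -> float:
    """Level of one channel at center, in dB relative to the same channel
    when the control is turned fully toward that side."""
    return 20.0 * math.log10(rms(centered) / rms(hard_side))


def nearest_law(attenuation_db: float,
                candidates: tuple[float, ...] = (0.0, -3.0, -4.5, -6.0)) -> float:
    """Pick the candidate law (0.0 = plain balance) closest to the measurement."""
    return min(candidates, key=lambda law: abs(law - attenuation_db))


# Synthetic stand-in for two exported renders of a 440 Hz tone at 48 kHz.
tone = [math.sin(2 * math.pi * 440 * n / 48000) for n in range(4800)]
centered = [0.7071 * s for s in tone]
measured = center_attenuation_db(tone, centered)
print(f"{measured:.2f} dB -> closest law: {nearest_law(measured)} dB")
```

With real exports you would replace the two synthetic lists with the samples of the same channel from each render; a reading near 0 dB would point to a plain balance control, while readings near -3, -4.5, or -6 dB would point to the corresponding pan law.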

It is possible that the panning controls for stereo tracks in Notion 3 also follow a "pan rule", but the specific details are not so clear, and in some respects the documentation runs a bit counter to what I have observed with headphone listening . . .

Immediately above the fader are controls for panning (specifying the left-right placement of the instrument in stereo). With the stereo sounds of NOTION3, you have two dimensions to specify: left/right placement and “width” of the sound.

You drag the L dot (for left speaker) and/or the R dot (for the right speaker) to specify the sonic placement of the instrument in a stereo field. The further away you want the instrument to sound, the closer you bring the L and R dots together anywhere across the axis. The default placement (left stereo channel far left and right stereo channel far right) is optimum for a close- to medium-mic’d sounds.

[SOURCE: "Notion 3 User Guide" (Notion Music) ]
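The two-dimensional control described in the user guide can be summarized by two numbers derived from the dot positions: where the image sits (the midpoint of the L and R dots) and how wide it is (the distance between them), plus a flag for the reversed state that turns the background pink or red. This small Python sketch assumes a -1.0 to +1.0 scale for each dot, matching values like {-0.3 pan L, +0.3 pan R}; the class and property names are hypothetical and do not come from Notion 3.

```python
from dataclasses import dataclass


@dataclass
class DotSetting:
    """Positions of the L and R dots, each assumed to range from -1.0 to +1.0."""
    left_dot: float
    right_dot: float

    @property
    def center(self) -> float:
        """Left-right placement of the image: midpoint of the two dots."""
        return (self.left_dot + self.right_dot) / 2.0

    @property
    def width(self) -> float:
        """Stereo width: distance between the dots (2.0 = full width)."""
        return abs(self.right_dot - self.left_dot)

    @property
    def is_reversed(self) -> bool:
        """True when the L dot sits to the right of the R dot (channels swapped)."""
        return self.left_dot > self.right_dot


for setting in (DotSetting(-1.0, 1.0), DotSetting(-0.3, 0.3),
                DotSetting(-0.1, 0.1), DotSetting(1.0, -1.0)):
    print(setting, setting.center, round(setting.width, 3), setting.is_reversed)
```

Seen this way, the default setting is centered with full width, while {-0.1 pan L, +0.1 pan R} is also centered but with a width of only 0.2.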

The counterintuitive aspect when listening with headphones is that when I want to place an instrument at top-center in the front (proximal) rather than in the back (distal), I move the L and R dots very close together and center them in the middle, where {-0.1 pan L, +0.1 pan R} is a very tight proximal panning location setting . . .

For example, the synthesizer bass in "Sparkles" (The Surf Whammys) has a panning setting of {-0.3 pan L, +0.3 pan R}, and it does not sound far away, really . . .

"Sparkles" (The Surf Whammys) -- MP3 (4.1MB, 291-kbps [VBR], approximately 1 minute and 55 seconds)

Really!

I have the Panorama 5 (Wave Arts) VST plug-in, and it does very realistic 3D audio imaging when you listen with headphones. It also has algorithms for 3D audio imaging for loudspeaker listening, but it uses advanced reverberation, echo, and phase algorithms that require a lot of space or headroom in the mix, since it uses reverberation, reflection (which affects phase), and echoes as distance and angle cues, so it is not so effective when there are a lot of instruments . . .

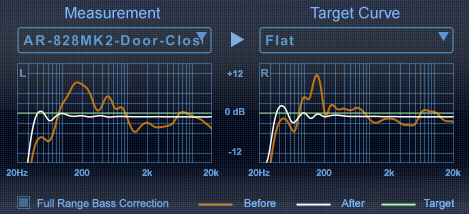

As you can see in the user interface for Panorama 5, there are a lot of parameters that affect the perceived location of a sound, which is one of the reasons that Panorama 5 is such an advanced 3D audio effect. It also is the reason that Panorama 5 is a very "heavy" VST plug-in with respect to processing requirements, since it uses elaborate mathematical algorithms to adjust the various distance and angle cues for a sound . . .

Panorama 5 (Wave Arts) ~ 3D Audio Imaging VST Plug-in

For purposes of "sparkling", the general goal is to avoid needing to use what essentially are binaural effects, which I think is possible to some degree. That is the reason for the current foray into panning, balancing, and so forth, since with Notion 3 I can control the notes of a "sparkled" instrument very precisely, including their pitch, because in the "sparkling" technique (a) I can put a note on any of the perhaps 8 staves or clefs and (b) I can control the pitch of the note by composing it specifically for this purpose . . .

Binaural Recording (Wikipedia)

In other words, if a note needs to be a specific pitch at a particular panning location to be perceived in a certain location, then this is not difficult to do in Notion 3 so long as (a) there is a rule for it and (b) I can discover the rule, since (c) I can compose to the rule . . .

I tend to do everything "by ear", which works nicely most of the time, but "sparkling" involves creating and manipulating auditory illusions, and one of the realities of auditory illusions is that by definition you cannot trust what you hear, since the general fact of auditory illusions is that what you hear is very different from what actually is happening with the audio, hence it helps to know the applicable rules . . .

Auditory Illusions (Wikipedia)

Explained another way, knowing the rules for an auditory illusion makes it possible to create and to control the auditory illusion by adjusting and setting various parameters using a mathematical formula rather than "by ear" . . .

Once the auditory illusion is created and is working correctly, you can verify it "by ear", but you need to know the underlying rules to make it happen . . .

And in the "always trust your ears" department, it is nice to have verified my hypothesis that "panning" has a logarithmic aspect, which is fabulous . . .

Fabulous!

P. S. From a different perspective, about two years ago I realized that I needed to focus on the producing, recording, mixing, and mastering aspects of digital audio production, which is entirely different from the focus of composing, playing instruments, and singing, although it includes a bit of arranging work, since (a) arranging is a key aspect of producing and (b) arranging involves managing and coordinating instrumental and vocal parts so that they do not compete for the same sonic spaces. This is yet another new area of detailed focus here in the sound isolation, where the general rule is that each instrument and vocal part needs to have its own unique space within the mix . . .

And I now have a better understanding of the vast importance of what George Martin did when he produced the Beatles . . .

The Beatles were excellent composers, musicians, and singers, but George Martin made them sound good on records, for sure . . .

For sure!